How we are teaching our AI engine to turn articles into videos

7 Feb 2025

What started as one simple sentence became a complex sequence.

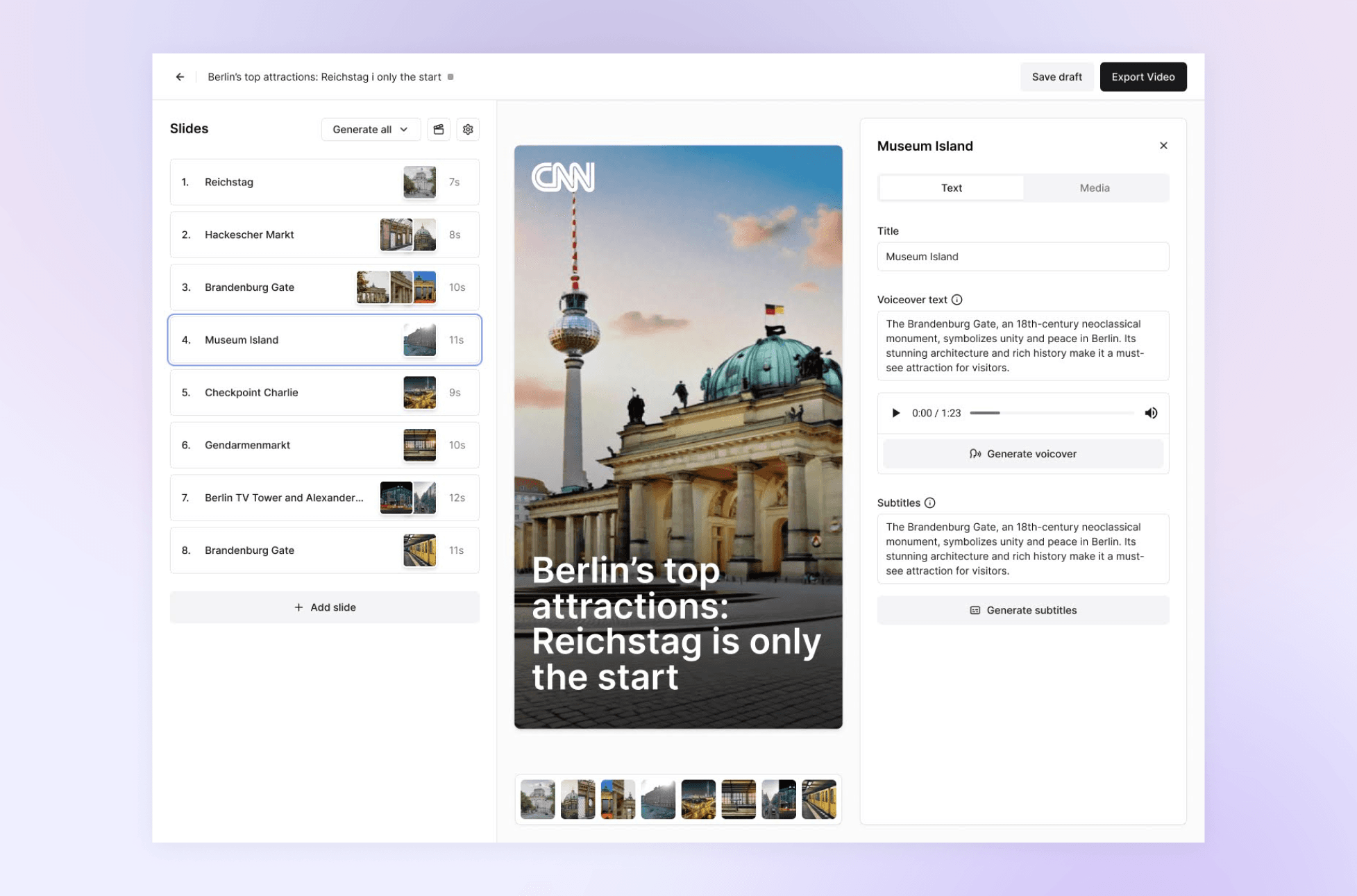

When we started working on Flipatic, our new solution for transforming newsroom articles into videos, we thought we were solving a straightforward problem: automating the process of adapting written news into engaging video content.

What we discovered was something far more complex.

Each type of content – news articles, interviews, opinion pieces – required its own approach. The AI needed much more than a simple summarization prompt to create structured, meaningful, and engaging content.

Here’s how we tackled the challenges, what we learned along the way, and why Flipatic is becoming more than just an automation tool.


Prompting: from one sentence to 1.5 pages

At first, we consciously deprioritized perfect prompt engineering. We knew that Flipatic had more pressing technical challenges to solve first.

So, we started with one simple sentence as our initial prompt – just enough to create a proof of concept (PoC).

The sentence was: "Create video scenario based on provided topic".

Once we had built the foundation, that decision caught up with us, as expected. When we pieced the different components together, the results weren’t satisfactory. That’s when we stepped back to rework the prompt.

What started as a single sentence expanded into 1.5 pages of structured instructions, breaking the task into a sequence of smaller, manageable steps.

The instructions are divided into sections, such as article type, video type, length & format, title, quoting, output format, and additional guidelines. Each section serves a distinct purpose, containing specific commands related to scenario construction. These instructions function almost like variables in programming, allowing for precise customization based on the publisher’s needs, content, style, and language. Whether the tone needs to be serious and factual or lighthearted and engaging, the prompt adapts accordingly.
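To make the "variables" comparison concrete, here is a minimal sketch of a sectioned prompt assembled from per-publisher settings. It is not Flipatic's actual prompt: the `PromptConfig` fields, their defaults, and the wording of each section are assumptions made for illustration; only the section names come from the list above.

```python
from dataclasses import dataclass


@dataclass
class PromptConfig:
    # Hypothetical per-publisher settings; names and defaults are assumptions.
    article_type: str = "news"
    video_type: str = "slideshow"
    max_slides: int = 8
    tone: str = "serious and factual"
    language: str = "English"


def build_prompt(cfg: PromptConfig) -> str:
    """Assemble the prompt from named sections filled in from the config."""
    sections = {
        "Article type": f"The source is a {cfg.article_type} article.",
        "Video type": f"Produce a scenario for a {cfg.video_type} video.",
        "Length & format": f"Use at most {cfg.max_slides} slides.",
        "Title": "Open with a short title slide.",
        "Quoting": "Copy direct quotes verbatim; never paraphrase them.",
        "Output format": "Return one slide per line.",
        "Additional guidelines": f"Write in {cfg.language}. Keep the tone {cfg.tone}.",
    }
    # Each section becomes a titled block, so one section can be edited
    # without touching the others.
    return "\n\n".join(f"## {name}\n{text}" for name, text in sections.items())
```

Calling `build_prompt(PromptConfig(tone="lighthearted and engaging", language="Polish"))` would change only the sentences that depend on those two settings and leave the rest of the prompt untouched.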

This approach follows the logic of chain prompting, where a complex task is broken down into a step-by-step sequence of interconnected prompts, ensuring that each stage builds upon the previous one.

Challenges we solved with chain prompting

By chain prompting, we mean a structured sequence where each phase refines the previous one. This means:

- Processing content incrementally rather than making all decisions at once;

- Ensuring each step builds upon the last, reducing errors and inconsistencies;

- Improving accuracy, coherence, and storytelling flow without sacrificing efficiency.
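The sequence described above can be sketched as a loop in which each step's output becomes the next step's input. This is a simplified illustration, not Flipatic's pipeline: the three step templates are invented, and `llm` stands for any function that takes a prompt and returns text, so no specific model API is assumed.

```python
from typing import Callable, List

# Any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]

# Hypothetical step templates; the real chain is not public.
STEPS: List[str] = [
    "Extract the core event and key facts from this article:\n{input}",
    "Order these facts into a slide-by-slide outline:\n{input}",
    "Write short on-screen text for each slide in this outline:\n{input}",
]


def run_chain(article: str, llm: LLM, steps: List[str] = STEPS) -> List[str]:
    """Run the steps in order, feeding each output into the next prompt."""
    outputs: List[str] = []
    current = article
    for template in steps:
        current = llm(template.format(input=current))
        outputs.append(current)  # keep intermediates for inspection
    return outputs
```

Keeping the intermediate outputs makes it easier to see which step introduced a problem, which is one practical benefit of splitting the task.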

Chain prompting helped us with:

Quotes: Flipatic now identifies and formats direct quotes correctly rather than paraphrasing them.

Numerals: Flipatic now prevents inconsistencies, ensuring numbers remain formatted correctly instead of being converted into words.
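Both properties can also be checked outside the model. The sketch below is an assumption about how such a check might look, not a description of Flipatic's code: it flags quoted passages in a script that do not appear verbatim in the article, and numerals in the script that do not match a numeral in the article.

```python
import re
from typing import List


def find_quotes(text: str) -> List[str]:
    """Return passages enclosed in straight or curly double quotes."""
    return re.findall(r'["“]([^"”]+)["”]', text)


def find_numbers(text: str) -> List[str]:
    """Return numerals such as 1,500 or 12%, kept exactly as written."""
    return re.findall(r"\d+(?:[.,]\d+)*%?", text)


def check_script(article: str, script: str) -> List[str]:
    """List quotes and numerals in the script that the article does not contain."""
    problems: List[str] = []
    for quote in find_quotes(script):
        if quote not in article:
            problems.append(f"quote not found verbatim in article: {quote!r}")
    article_numbers = set(find_numbers(article))
    for number in find_numbers(script):
        if number not in article_numbers:
            problems.append(f"numeral does not match the article: {number}")
    return problems
```

A check like this only covers numerals written as digits; a number the model has spelled out in words would need a separate rule.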

We also introduced a new validation step – Flipatic not only generates content but also checks its own work. After it completes a task, we prompt the AI to review its output, catch inconsistencies, and refine the final result.
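A generate-then-review step of this kind could look roughly like the following. The prompt wording, the `OK` convention, and the `max_rounds` limit are all assumptions for the sake of the example; `llm` is again any prompt-to-text function.

```python
from typing import Callable

LLM = Callable[[str], str]


def generate_with_review(article: str, llm: LLM, max_rounds: int = 2) -> str:
    """Draft a scenario, then let the model review and revise it."""
    draft = llm(f"Write a video scenario for this article:\n{article}")
    for _ in range(max_rounds):
        verdict = llm(
            "Review the scenario against the article. Reply with exactly OK "
            "if it is consistent, otherwise list the problems.\n"
            f"ARTICLE:\n{article}\nSCENARIO:\n{draft}"
        )
        if verdict.strip() == "OK":
            break  # the reviewer found nothing to fix
        draft = llm(
            f"Revise the scenario to fix these problems:\n{verdict}\n"
            f"SCENARIO:\n{draft}\nARTICLE:\n{article}"
        )
    return draft
```

Capping the number of rounds keeps cost and latency predictable even when the reviewer keeps finding issues.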

Importantly, we treat the prompt not as a static piece of text but as a living system that keeps evolving. That’s why we built a prompt management system using Langfuse. This allows us to compare different prompt versions, observe how Flipatic behaves with changes, and revert to previous versions if needed.

Langfuse gives us the flexibility to test, iterate, and adjust Flipatic’s performance continuously – essential in an industry where AI models are evolving rapidly.
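The three operations mentioned above (compare, observe, revert) boil down to keeping every prompt version and a pointer to the active one. The toy class below illustrates that idea in memory only. It is deliberately not the Langfuse API, and the class and method names are invented for this example.

```python
import difflib
from typing import Dict, List, Optional


class PromptRegistry:
    """Toy in-memory version store; a stand-in, not the Langfuse API."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}
        self._active: Dict[str, int] = {}

    def publish(self, name: str, text: str) -> int:
        """Store a new version, make it active, and return its 1-based number."""
        versions = self._versions.setdefault(name, [])
        versions.append(text)
        self._active[name] = len(versions)
        return len(versions)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the active one if none is given."""
        number = version if version is not None else self._active[name]
        return self._versions[name][number - 1]

    def rollback(self, name: str, version: int) -> None:
        """Point the active version back at an earlier one."""
        if not 1 <= version <= len(self._versions[name]):
            raise ValueError(f"unknown version {version} for {name!r}")
        self._active[name] = version

    def diff(self, name: str, old: int, new: int) -> List[str]:
        """Return a unified diff between two stored versions."""
        return list(difflib.unified_diff(
            self.get(name, old).splitlines(),
            self.get(name, new).splitlines(),
            fromfile=f"v{old}", tofile=f"v{new}", lineterm="",
        ))
```

Nothing is ever overwritten, so reverting is just a pointer change, and any two versions can be compared after the fact.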

Example 1: teaching AI to structure the news

Flipatic is designed to convert written news articles into videos, and we are developing it to suit newsrooms’ needs. News articles follow clear journalistic principles: they present the most important details first, provide a structured chronology, and maintain an objective tone. Keeping those principles intact while producing a compelling video turned out to be anything but simple.

To generate high-quality video scripts, we developed a multi-step process that guides the AI through understanding the story. We needed the AI to be able to extract the core event and key details of the news, separate facts from opinions, and match the visuals to the content.

What was also crucial:

Clickbait control – We treat clickbait intensity as a variable within the prompt. By default, Flipatic maintains a neutral, balanced tone, but we recognize that some publishers have a naturally high-impact, tabloid editorial style. With the right adjustments, Flipatic can adapt to that tone, mimicking the level of urgency or emotion their audience expects, while still staying within the boundaries of credibility.

Avoiding redundancy – By grouping similar details instead of repeating them across slides, the content remains sharp and concise.

Preserving context – Where relevant, Flipatic includes brief historical background or external references without overwhelming the audience.
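As an illustration of treating clickbait intensity as a prompt variable, the sketch below maps a numeric level to a tone instruction and always appends a credibility rule. The three levels and the exact wording are assumptions; the source only states that the default is neutral and that the tone can be raised for tabloid-style publishers.

```python
# Hypothetical intensity scale: 0 = neutral (default), 2 = tabloid style.
TONE_BY_INTENSITY = {
    0: "Use a neutral, balanced tone. Avoid superlatives and teasers.",
    1: "Use an engaging tone. One hook per video is acceptable.",
    2: "Use a high-impact, tabloid tone with urgent wording.",
}

# Appended at every level so a stronger tone never licenses unsupported claims.
CREDIBILITY_GUARD = "Never state anything the article does not support."


def tone_instruction(intensity: int = 0) -> str:
    """Return the tone section of the prompt for a given intensity level."""
    level = max(0, min(intensity, 2))  # clamp out-of-range values
    return f"{TONE_BY_INTENSITY[level]} {CREDIBILITY_GUARD}"
```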

Example 2: Interviews

Unlike news articles, interviews are dynamic, multi-threaded, and filled with subtle exchanges that don’t fit neatly into a chronological format. They don’t follow a straight path – topics resurface, questions overlap, and statements gain meaning only in context. A simple summarization approach often resulted in confusing, out-of-context snippets. So, instead of paraphrasing chronologically, we trained Flipatic to track themes across the conversation, ensuring cohesion rather than fragmented responses.
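One way to picture theme tracking is as a regrouping of question-and-answer exchanges by topic rather than by position in the conversation. The sketch below assumes each exchange already carries a theme label (for example, assigned by an earlier model step); the `Exchange` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Exchange:
    question: str
    answer: str
    theme: str  # assumed to be labelled by an earlier step


def group_by_theme(exchanges: List[Exchange]) -> Dict[str, List[Exchange]]:
    """Group exchanges by theme, keeping themes in order of first appearance."""
    grouped: Dict[str, List[Exchange]] = {}
    for exchange in exchanges:
        grouped.setdefault(exchange.theme, []).append(exchange)
    return grouped
```

If a topic resurfaces late in the interview, its exchange lands next to the earlier ones on the same theme, so the resulting slides can present it with its context.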

To preserve authenticity, we introduced “quote slides” – key moments displayed as text against a styled background, preventing unintended AI rewording.

Since interviews can be long (and we need to keep the viewer engaged), we introduced a cap on video length and prioritize the most compelling moments.
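A length cap combined with prioritization can be sketched as a greedy selection under a time budget. This is one possible approach, not necessarily the one Flipatic uses: the `score` field is a hypothetical measure of how compelling a moment is, and the durations are assumed to be known in advance.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Moment:
    text: str
    seconds: float
    score: float  # hypothetical "how compelling" rating, higher is better


def select_moments(moments: List[Moment], max_seconds: float) -> List[Moment]:
    """Pick the highest-scoring moments that fit, then restore original order."""
    chosen: List[int] = []
    used = 0.0
    ranked = sorted(enumerate(moments), key=lambda pair: pair[1].score, reverse=True)
    for index, moment in ranked:
        if used + moment.seconds <= max_seconds:
            chosen.append(index)
            used += moment.seconds
    return [moments[i] for i in sorted(chosen)]
```

Restoring the original order after selection keeps the video coherent, since the best moments are still shown in the sequence in which they were said.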

Flipatic also ensures every interview starts by clearly introducing who is speaking and why they matter before diving into responses.

To refine this process, we consulted experienced journalists, ensuring that Flipatic structures interview-based content according to industry standards.

Here's an example of an interview (in Polish)

What’s coming next?

As you can see, Flipatic isn’t just about speeding up video production – we really care about maintaining editorial integrity and closing the gap between automation and human oversight. We want to ensure that the content it produces is clear, engaging, and structured, but also faithful to its source material.

In the near future, we plan to keep combining multiple AI models (Flipatic now integrates Claude AI and OpenAI) and to use different models for different types of content, with backup solutions in place when needed.
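Routing by content type with a backup model could be structured along these lines. The routing table, the placeholder names `model_a` and `model_b`, and the `generate` function are assumptions for illustration; each model is represented as a plain prompt-to-text function rather than a real vendor client.

```python
from typing import Callable, Dict, List

Model = Callable[[str], str]

# Hypothetical routing table: model preference order per content type.
ROUTES: Dict[str, List[str]] = {
    "news": ["model_a", "model_b"],
    "interview": ["model_b", "model_a"],
}


def generate(content_type: str, prompt: str, models: Dict[str, Model]) -> str:
    """Try each model configured for the content type until one succeeds."""
    errors: List[str] = []
    for name in ROUTES[content_type]:
        try:
            return models[name](prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

The same table expresses both ideas from the paragraph above: the first entry is the preferred model for that content type, and the remaining entries are the backups.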

We will also keep iterating on our tool, monitoring it closely, and adapting to publishers’ needs. We know this work will never be fully finished: AI models are evolving, and as old weaknesses disappear, new challenges emerge.

What we can say for sure is that we are committed to adapting and improving Flipatic – AI technology will keep changing, and so will we.

We’re currently running pilots with selected publishers. If you’d like to explore AI-powered video automation, let’s talk.